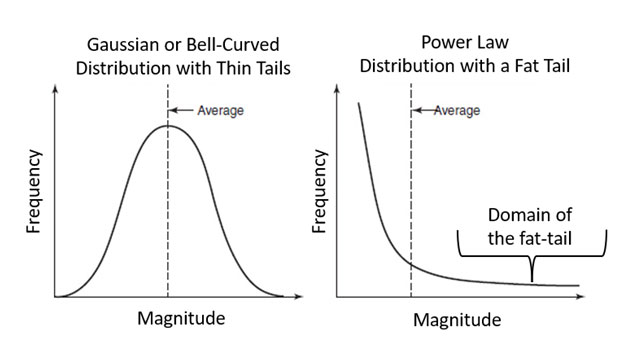

From a risk perspective, we live in two statistical worlds. The first, which is relatively comfortable to live in, could be called the Gaussian World, after the German mathematician Johann Carl Friedrich Gauss (1777–1855). This is a world where problems are well defined; where statistical distributions are well represented by normal (also called bell-curve or Gaussian) distributions; where averages, totals and standard deviations are meaningful; where most data points lie near the average; where cost-benefit analyses provide good guidance for decision-making; and where outcomes are predictable in a probabilistic sense. It is also a world that feels fairly equitable, since there are relatively few outliers.

Then there is another statistical world, one that is quite uncomfortable. This is the world where statistical distributions do not look like bell curves but instead have what are called fat tails (often well represented by power laws), where extreme events occur with disturbing frequency. In this world averages and standard deviations are not meaningful, most data points lie nowhere near the average, cost-benefit analyses lack robustness at best and may be tragically misleading at worst, and the world is not predictable in a probabilistic sense. I will call this the Fat-Tailed World. Being fat-tailed means that there are very many small events but also occasional extreme ones of a size that is effectively absent from Gaussian distributions. Unlike the Gaussian World, it does not feel equitable, since resources and impacts are very much affected by outliers. Whereas egalitarians and communitarians would tend to like the Gaussian World, the Fat-Tailed World is likely preferred by Social Darwinists, who tend to look to natural law to morally justify the enormous success of some people or institutions co-existing with vast numbers of the poor and vulnerable.

We live in both of these worlds simultaneously, yet for many of us the mental constructs used to frame disaster risk are based primarily, or only, upon the former: the Gaussian World. But as Nassim Nicholas Taleb notes, “Ruin is more likely to come from a single extreme event than from a series of bad episodes” (Taleb, 2019). We ignore the Fat-Tailed World at our great peril.

That disasters are a fat-tailed problem is one of the underlying reasons why disaster losses have been escalating over the past century. Our systems are better designed for emergencies than they are for disasters, which are both quantitatively and qualitatively different (Quarantelli, 2006), and it is likely that some trends in hazard (e.g., climate change), vulnerability (e.g., divisions of wealth) and exposure (e.g., urbanization) are making some fat tails fatter. Disasters and catastrophes, not emergencies, are the level of event that requires our greatest attention if we are to avoid ruinous outcomes.

Figure 1 visually illustrates the difference between power laws and Gaussian distributions. Power laws have a single tail, whereas Gaussian distributions have two. At large values the tail of a power law is fatter than that of the Gaussian distribution, which, as previously mentioned, means that extreme events are more common. The fat-tailed world is sometimes called the “Paretian World”, after the Italian economist Vilfredo Pareto, who observed in 1896 that 80% of the land in Italy was owned by only 20% of the population. He is also said to have noticed the same pattern in his garden, where 20% of his plants were bearing 80% of the fruit. This is one outcome of power laws and has been generalized to the Pareto Rule, which states that 80% of outcomes result from 20% of actions, or 80% of impacts result from 20% of events (of course the split is not always exactly 80/20, even for fat-tailed distributions; it can be more or less extreme).
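The contrast in Figure 1 can also be put into numbers. The following is a minimal Python sketch, not part of the original analysis, that uses the textbook formulas for the normal and Pareto distributions. The function names, the thresholds, and the Pareto shape parameter `alpha = log(5) / log(4)` (about 1.16, the value for which the 80/20 split holds exactly) are illustrative assumptions.

```python
import math

def gaussian_tail(k):
    """P(X > mean + k * sd) for a normal distribution."""
    return 0.5 * math.erfc(k / math.sqrt(2))

def pareto_tail(x, x_min=1.0, alpha=1.16):
    """P(X > x) for a Pareto (power-law) distribution, valid for x >= x_min."""
    return (x_min / x) ** alpha

def pareto_top_share(p, alpha):
    """Share of the total held by the largest fraction p of outcomes (alpha > 1)."""
    return p ** (1.0 - 1.0 / alpha)

# Assumed shape parameter: the one that reproduces an exact 80/20 split.
ALPHA_80_20 = math.log(5) / math.log(4)

# Gaussian: chance of exceeding the mean by k standard deviations.
for k in (2, 5, 10):
    print(f"Gaussian, {k} sd above the mean: {gaussian_tail(k):.3g}")

# Pareto: chance of exceeding m times the smallest possible value.
for m in (10, 100, 1000):
    print(f"Pareto, {m}x the minimum: {pareto_tail(m, alpha=ALPHA_80_20):.3g}")

print(f"Top 20% share of the total: {pareto_top_share(0.2, ALPHA_80_20):.2f}")
```

The Gaussian exceedance probability collapses faster than exponentially as the threshold moves out, while the Pareto probability falls only as a power of the threshold, which is what a fat tail means. The two sets of thresholds are not on a common scale (a Pareto distribution with this shape has no finite variance, so "standard deviations above the mean" has no counterpart), so only the rates of decay should be compared.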

One example of this is wildfires in Canada. As of 2022, the Canadian Disaster Database maintained by Public Safety Canada lists 98 wildfire events, 70 of which have an associated financial damage cost. Of those 70 events, a single one, the Fort McMurray fire of 2016, accounts for 70% of the cumulative cost of all 70 fires. That one fire dramatically changed the empirical risk profile of wildfires in Canada; this is the type of event that occurs with disturbing frequency in fat-tailed distributions but is virtually absent in Gaussian distributions. Recognizing that disasters are fat-tailed calls for a fundamentally different perspective on risk and on how to both calculate and manage it. In a Gaussian world our risk tolerance can be greater; in a fat-tailed world even a small non-zero risk, taken repeatedly, can eventually result in ruin.
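The point that a non-zero risk can eventually result in ruin follows from simple compounding. The sketch below is an illustration rather than a model of any real hazard: it assumes a fixed, hypothetical 1% chance of a ruinous event in each period and independence between periods, and the function name is chosen for this example only.

```python
def prob_of_ruin(p_per_period, periods):
    """Chance of at least one ruinous event, assuming independent periods."""
    return 1.0 - (1.0 - p_per_period) ** periods

# Hypothetical per-period probability, chosen only for illustration.
P_RUIN = 0.01

for n in (10, 50, 100, 500):
    print(f"{n:>3} periods: {prob_of_ruin(P_RUIN, n):.1%}")
```

Under these assumptions the cumulative probability approaches certainty as the number of periods grows, however small the per-period risk.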

To illustrate the difference between a Gaussian World and a Fat-Tailed World, consider the following. I live in an area where we are sometimes visited by racoons. The average racoon weighs between 10 and 15 lb. Their weight distribution is approximately Gaussian, which means that I don’t have to worry very much about being attacked by a racoon if I go out for an evening walk: I am simply far bigger than they are. If the weight distribution of racoons were fat-tailed instead of Gaussian, then the vast majority of the ones I encounter would be under 10 lb (less than the mean), but every once in a while I would stumble across a 50 to 100 lb (or larger) racoon – a very scary thought! My risk assessment would have to be dramatically different. In the wildfire example discussed previously, the Fort McMurray event cost about 50 times the average cost of the 70 events. If this ratio were applied to the racoon thought experiment, then a Fort McMurray-scale anomaly would be a racoon weighing between 500 and 750 lb.
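The factor of about 50 follows directly from the wildfire figures quoted earlier: if one event accounts for a share s of the total cost of n events, it costs s × n times the average event. A short check in Python, with the function and variable names chosen for illustration and the weights taken from the thought experiment:

```python
def multiple_of_mean(share_of_total, n_events):
    """How many times the average event one event is, given its share of the total."""
    return share_of_total * n_events

# Fort McMurray: 70% of the cumulative cost of 70 costed wildfire events.
ratio = multiple_of_mean(0.70, 70)  # about 49, which the text rounds to 50

# Typical racoon weights from the thought experiment, in lb.
low_lb, high_lb = 10, 15

print(f"Largest fire vs. average fire: {ratio:.0f}x")
print(f"Scaled racoon: {50 * low_lb} to {50 * high_lb} lb")
```

Because the 70% share is itself a rounded figure, 49 and "about 50" agree to within rounding.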

In a nutshell, from a risk perspective, this is the difference between the Gaussian and Fat-Tailed Worlds. One need not worry about mega-sized racoons, but one should worry about mega-sized disasters. Unfortunately, we don’t worry enough…

References:

Taleb, N.N. (2019). Probability, Risk, and Extremes. In Needham, D. and Weitzdörfer, J. (editors), Extremes, pp. 46–66. Cambridge University Press. https://www.cambridge.org/core/books/abs/extremes/probability-risk-and-extremes/B4232F73B536B2AFD57A148208B08AB7

Quarantelli, E. L. (2006). Catastrophes are different from disasters: Some implications for crisis planning and managing drawn from Katrina. Understanding Katrina: Perspectives from the social sciences.